How are the confidence intervals calculated

There are confidence intervals on the model parameters, and confidence intervals on the plots. This document explains how both are calculated. The confidence intervals on the plots use the confidence intervals on the model parameters in the procedure that is explained in the second section of this document. Before reading this document, you should have a reasonable understanding of how Maximum Likelihood Estimation (MLE) works and also understand what partial derivatives are and how to calculate them.

Confidence intervals on the parameters

Once the model parameters have been found (whether by Least Squares Estimation or Maximum Likelihood Estimation), we can use these parameters along with the data and the log-likelihood function, to calculate the hessian matrix. We can use autograd.differential_operators.hessian to calculate the hessian matrix, which is a square matrix of partial derivatives. The inverse of the hessian matrix gives us the covariance matrix, which contains the numbers we need for finding the confidence intervals. The covariance matrix is a matrix containing the variance of the parameters along the diagonal, and the covariance of the parameters outside of the diagonal. The size of the covariance matrix will match the number of parameters. For the Weibull Distribution (with parameters \(\alpha\) and \(\beta\)), the covariance matrix looks like this:

To find the confidence intervals on the parameters, we need the parameters, the standard error \(\left(\sqrt{variance}\right)\), and the desired confidence interval. For this example, we will use the default of 95% confidence (two sided). The formulas for the confidence intervals on the parameters have two forms, depending on whether they are strictly positive (like \(\alpha\) and \(\beta\) are for the Weibull Distribution) or unbounded (like \(\mu\) is for the Normal Distribution).

For strictly positive parameters, the formula is:

\(X_{lower} = \hat{X} . {\rm e}^{-Z.\frac{\hat{X}_{SE}}{\hat{X}}}\)

\(X_{upper} = \hat{X} . {\rm e}^{+Z.\frac{\hat{X}_{SE}}{\hat{X}}}\)

For unbounded parameters (which can be positive or negative), the formula is:

\(X_{lower} = \hat{X} - Z.\hat{X}_{SE}\)

\(X_{upper} = \hat{X} + Z.\hat{X}_{SE}\)

Where:

Z = Standard Normal Distribution Quantile of \(\frac{1 - CI}{2}\). This can be calculated using

-scipy.stats.norm.ppf((1 - CI) / 2). For CI = 0.95, Z = 1.95996. If finding a 1 sided interval, don’t divide by 2 in the formula.\(\hat{X}_{SE}\) = The standard error \(\left(\sqrt{Var(\hat{X})}\right)\).

\(X_{lower}\) = The lower confidence bound on the parameter based on the specified confidence interval (CI).

\(X_{upper}\) = The upper confidence bound on the parameter based on the specified confidence interval (CI).

If you would like to calculate these values yourself, you can check your result with reliability like this:

from reliability.Fitters import Fit_Weibull_2P

import scipy.stats as ss

import numpy as np

CI = 0.8

data = [43, 81, 41, 44, 52, 99, 64, 25, 41, 7]

fit = Fit_Weibull_2P(failures=data, CI=CI, show_probability_plot=False, print_results=False)

Z = -ss.norm.ppf((1 - CI) / 2)

print('Z =', Z)

print('alpha =',fit.alpha)

print('alpha_SE =',fit.alpha_SE)

alpha_upper = fit.alpha * (np.exp(Z * (fit.alpha_SE / fit.alpha)))

print('alpha_upper from formula =',alpha_upper)

print('alpha_upper from Fitter =',fit.alpha_upper)

'''

Z = 1.2815515655446004

alpha = 55.842280377094696

alpha_SE = 9.098820692892856

alpha_upper from formula = 68.8096875640737

alpha_upper from Fitter = 68.8096875640737

'''

There are a few exceptions to the above formulas for confidence intervals on the parameters. For three parameter distributions (such as Weibull_3P), the mathematics somewhat breaks down, requiring a minor modification. The bounds for \(\alpha\) and \(\beta\) are calculated using the Weibull_2P log-likelihood function and the bounds on \(\gamma\) are calculated using the Weibull_3P log-likelihood function. This method is used by reliability and reliasoft’s Weibull++ software.

In the case of the Exponential Distribution, the covariance matrix is a 1 x 1 matrix containing just \(Var(\lambda)\). The upper and lower bounds on \(\lambda\) are found using the formula above for strictly positive parameters.

Some matrices are non-invertable due to their values. While rare, if it occurs for the hessian matrix, it means that the inverse of the hessian matrix cannot be calculated so the covariance matrix is not able to be obtained. In such cases, a warning will be printed by reliability, the standard errors will be set to 0 and the upper and lower bounds of the parameters will match the parameters.

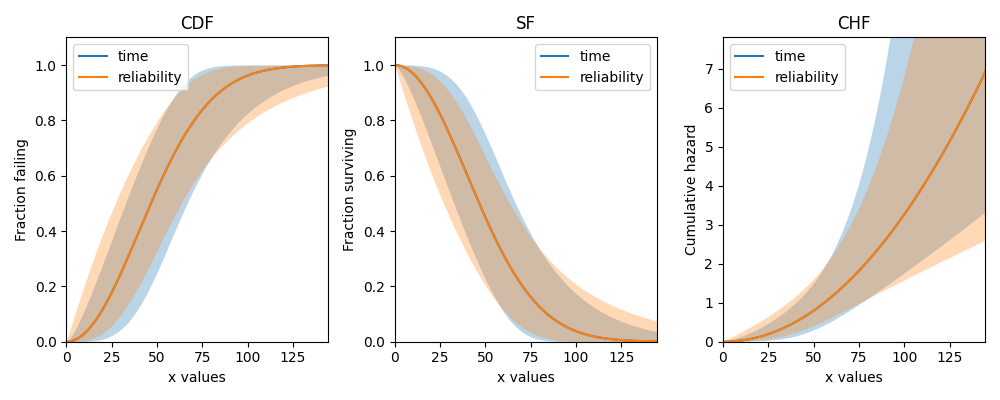

Confidence intervals on the plots

The confidence intervals on the plots (usually called confidence bounds) are available for the CDF, SF, and CHF. It is not possible to calculate confidence intervals for the PDF or HF. There are two types of confidence bounds, these are bounds on time (Type I) and bounds on reliability (Type II). Depending on the amount of data, these bounds may be almost the same (for large sample sizes) or quite different (for small sample sizes). The following example shows the differences in these bounds for the CDF, SF, and CHF.

from reliability.Fitters import Fit_Weibull_2P

import matplotlib.pyplot as plt

data = [43, 81, 41, 44, 52, 99, 64, 25, 41, 7]

fit = Fit_Weibull_2P(failures=data, show_probability_plot=False, print_results=False)

plt.figure(figsize=(10,4))

plt.subplot(131)

fit.distribution.CDF(CI_type='time',label='time')

fit.distribution.CDF(CI_type='reliability',label='reliability')

plt.title('CDF')

plt.legend()

plt.subplot(132)

fit.distribution.SF(CI_type='time',label='time')

fit.distribution.SF(CI_type='reliability',label='reliability')

plt.title('SF')

plt.legend()

plt.subplot(133)

fit.distribution.CHF(CI_type='time',label='time')

fit.distribution.CHF(CI_type='reliability',label='reliability')

plt.title('CHF')

plt.legend()

plt.tight_layout()

plt.show()

For larger values of CI (the default is 0.95), the distance between the solid line and the confidence bounds will increase.

Due to the relationship between the CDF, SF, and CHF, we only need to calculate the confidence bounds on the SF and we can use a few simple transformations to obtain the bounds for the CDF and CHF.

Bounds on time

The formulas for the confidence bounds on time (T) for the Weibull Distribution can be obtained as follows:

Begin with the equation for the SF: \(\qquad R = {\rm e}^{-(\frac{T}{\alpha })^ \beta }\)

Linearize the equation: \(\qquad ln(-ln(R)) = \beta.(\ln(T)-ln(\alpha))\)

Rearrange to make T the subject: \(\qquad ln(T) = \frac{1}{\beta}ln(-ln(R))+ln(\alpha)\)

Substitute \(u = ln(T)\): \(\qquad u = \frac{1}{\beta}ln(-ln(R))+ln(\alpha)\)

The upper and lower bounds on \(u\) are:

\(u_U = \hat{u} + Z.\sqrt{Var(\hat{u})}\).

\(u_L = \hat{u} - Z.\sqrt{Var(\hat{u})}\).

You’ll notice that this is the same formula for the bounds on the parameters (when unbounded) provided in the previous section. The formula for Z is also listed in the previous section.

Here’s the tricky part. We need to find \(Var(\hat{u})\). The formula for this comes from something called the Delta Method which states that:

\(\operatorname{Var} \left(h_r \right) = \sum_i \left( \frac{\partial h_r}{\partial B_i} \right)^2 \operatorname{Var}\left( B_i \right) + \sum_i \sum_{j \neq i} \left( \frac{ \partial h_r }{ \partial B_i } \right) \left( \frac{ \partial h_r }{ \partial B_j } \right) \operatorname{Cov}\left( B_i, B_j \right)\)

Applying this to \(u = \frac{1}{\beta}ln(-ln(R))+ln(\alpha)\) gives:

Since we made the substitution \(u = ln(T)\), we can obtain the upper and lower bounds on \(T\) using the reverse of this substitution:

\(T_U = {\rm exp}(u_U)\)

\(T_L = {\rm exp}(u_L)\)

The result we have produced will accept a value from the SF (a reliability between 0 and 1) and output the corresponding upper and lower times. It tells us that we can be 95% certain that the system reliability (R) will be reached somewhere between \(T_L\) and \(T_U\) (if CI=0.95).

Bounds on reliability

Beginning with the linearized equation for the SF: \(\qquad ln(-ln(R)) = \beta.(\ln(T)-ln(\alpha))\)

We make \(R\) the subject, which it already is (yay!) so no rearranging needed.

Now substitute \(u = ln(-ln(R))\): \(\qquad u = \beta.(\ln(T)-ln(\alpha))\)

As with the bounds on time, the bounds on reliability in terms of \(u\) are:

\(u_U = \hat{u} + Z.\sqrt{Var(\hat{u})}\).

\(u_L = \hat{u} - Z.\sqrt{Var(\hat{u})}\).

This time we have a different formula for \(\operatorname{Var} \left(u \right)\). Using the delta method on \(u = \beta.(\ln(T)-ln(\alpha))\) we can derive the following expression:

Once we have the full expression for \(u\) we need to make the reverse substitution:

\(R_U = {\rm exp}\left(-{\rm exp}\left(u_U\right)\right)\)

\(R_L = {\rm exp}\left(-{\rm exp}\left(u_L\right)\right)\)

The result we have produced will accept any time value (T) and will output the bounds on reliability (R) between 0 and 1 at that corresponding time. It tells us that we can be 95% certain that the reliability of the system lies between \(R_L\) and \(R_U\) (if CI=0.95) at the specified time.

How are the confidence bounds calculated using Python

The above derivations are tedious and become extremely difficult for more complicated equations (such as the Gamma Distribution). Within reliability the linearized forms of the SF (in terms of time and reliability) are specified, and then the partial derivatives are calculated using autograd.jacobian. In code (for bounds on time) it looks like this:

# weibull SF rearranged for t with v = ln(t)

def v(R, alpha, beta):

return (1 / beta) * anp.log(-anp.log(R)) + anp.log(alpha)

dv_da = jacobian(v, 1) # derivative w.r.t. alpha

dv_db = jacobian(v, 2) # derivative w.r.t. beta

def var_v(self, u): # u is reliability

return (

dv_da(u, self.alpha, self.beta) ** 2 * self.alpha_SE ** 2

+ dv_db(u, self.alpha, self.beta) ** 2 * self.beta_SE ** 2

+ 2 * dv_da(u, self.alpha, self.beta) * dv_db(u, self.alpha, self.beta) * self.Cov_alpha_beta

)

# v is ln(t) and Y is reliability

v_lower = v(Y, self.alpha, self.beta) - Z * (var_v(self, Y) ** 0.5)

v_upper = v(Y, self.alpha, self.beta) + Z * (var_v(self, Y) ** 0.5)

# transform back from v = ln(t)

t_lower = np.exp(v_lower)

t_upper = np.exp(v_upper)

There are several other challenges to getting Python to do this correctly, such as where to incorporate \(\gamma\) for location shifted distributions, how to distribute the points so they look smooth, how to correct for things (like reversals in the bounds) that are mathematically correct but practically (in the world of reliability engineering) incorrect, and how to correctly transform the bounds on the SF to get the bounds on the CDF or CHF. Some distributions (such as the Gamma Distribution) are particularly difficult and require a slightly different method to that which is explained above.

How can I extract the confidence bounds from the plot

For bounds on time, this can be done using the quantiles option in the fitter as shown below in option 1. Alternatively, once we have the fitted distribution object, we can extract values from bounds on time or reliability directly from the CDF, SF, or CHF. This is shown below in option 2. Multiple examples of the second method are provided in the document on working with fitted distributions.

from reliability.Fitters import Fit_Weibull_2P

import matplotlib.pyplot as plt

data = [43, 81, 41, 44, 52, 99, 64, 25, 41, 7]

q = [0.2, 0.3, 0.4]

# option 1 using quantiles argument

fit = Fit_Weibull_2P(failures=data, show_probability_plot=False, print_results=False, CI=0.95, quantiles=q)

lower = fit.quantiles['Lower Estimate']

point = fit.quantiles['Point Estimate']

upper = fit.quantiles['Upper Estimate']

fit.distribution.CDF()

# option 2 extracting values directly from the CDF

lower2, point2, upper2 = fit.distribution.CDF(CI_y=q,show_plot=False)

plt.scatter(lower, q, color='blue')

plt.scatter(point, q, color='purple')

plt.scatter(upper, q, color='red')

plt.scatter(lower2, q, color='white',marker='x')

plt.scatter(point2, q, color='lime',marker='x')

plt.scatter(upper2, q, color='yellow',marker='x')

plt.show()

Lastly, for bounds on either time or reliability, the Other_functions.crosshairs function provides an interactive set of crosshairs which can be used to find the values using the mouse.

A demo of how this works is shown here.

Further reading

More information and formulas are available in the following references from weibull.com, reliawiki and reliasoft: